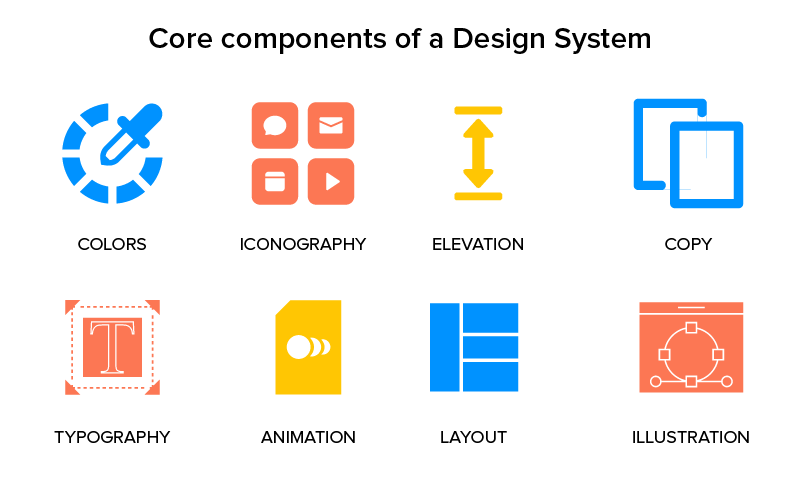

Every scalable and reliable software system is built on a set of core components that work together to handle user requests, process data, and maintain performance under load. Understanding these components is essential for designing systems that can scale efficiently and remain stable as traffic grows.

This post explains the most important system design components, including databases, caching, load balancers, and message queues, along with their roles in modern architectures.

Role of Core Components in System Design

Core components define how data flows through a system and how requests are handled. Poor choices at this level can lead to performance bottlenecks, downtime, and scalability issues.

According to the system architecture overview by IBM, selecting the right components is critical for building scalable systems.

Databases in System Design

Databases are responsible for storing and retrieving application data. Choosing the right database depends on data structure, consistency requirements, and scalability needs.

Relational databases are commonly used for structured data and strong consistency. The relational database explanation explains how tables and relationships work together.

NoSQL databases are often preferred for large-scale distributed systems. The NoSQL database guide highlights how flexible schemas support scalability.

Database Scaling Strategies

Databases can be scaled vertically by upgrading hardware or horizontally by distributing data across multiple servers. Two horizontal techniques are widely used in large systems: replication, which keeps copies of the same data on several servers, and sharding, which splits the data so that each server holds only a subset. Guides on database scaling strategies explain how modern applications manage growing datasets.
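To make sharding more concrete, here is a minimal sketch in Python. It assumes simple hash-based routing, where a stable hash of the key decides which shard holds the record. The names `shard_for` and `ShardedStore` are hypothetical, and plain dictionaries stand in for real database servers.

```python
import hashlib


def shard_for(key: str, num_shards: int) -> int:
    """Map a key to a shard index using a stable hash (illustrative helper)."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    # Use the first 8 bytes as an integer so the result is the same on every run.
    return int.from_bytes(digest[:8], "big") % num_shards


class ShardedStore:
    """Toy key-value store; each dict stands in for a separate database server."""

    def __init__(self, num_shards: int) -> None:
        self.shards = [dict() for _ in range(num_shards)]

    def put(self, key: str, value) -> None:
        self.shards[shard_for(key, len(self.shards))][key] = value

    def get(self, key: str):
        return self.shards[shard_for(key, len(self.shards))].get(key)


store = ShardedStore(num_shards=4)
store.put("user:42", {"name": "Ada"})
print(store.get("user:42"))
```

One trade-off of this modulo approach is that changing the number of shards remaps most keys. Schemes such as consistent hashing are often used to reduce that data movement.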

Caching in System Design

Caching improves system performance by storing frequently accessed data in fast storage. It reduces database load and speeds up response times. According to the caching fundamentals by Cloudflare, effective caching significantly improves user experience.

Types of Caching

Client-side caching stores data in the user’s browser or device. Server-side caching stores data in memory using in-memory data stores such as Redis. The Redis caching overview explains how in-memory caching improves performance for high-traffic applications.
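A server-side cache can be sketched in a few lines of Python. The example below assumes a cache-aside pattern with a time-to-live (TTL): the application checks the cache first and only calls the slower data source on a miss or after the entry expires. `TTLCache` and `load_profile` are hypothetical names, and an in-process dictionary stands in for a real in-memory store.

```python
import time
from typing import Any, Callable, Dict, Tuple


class TTLCache:
    """In-process cache whose entries expire after ttl_seconds (illustrative)."""

    def __init__(self, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl = ttl_seconds
        self.clock = clock  # injectable so expiry can be tested without sleeping
        self._entries: Dict[str, Tuple[float, Any]] = {}

    def get_or_load(self, key: str, loader: Callable[[str], Any]) -> Any:
        now = self.clock()
        entry = self._entries.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]  # cache hit: the slow source is not touched
        value = loader(key)  # cache miss or expired entry: go to the source
        self._entries[key] = (now + self.ttl, value)
        return value


def load_profile(user_id: str) -> dict:
    """Stand-in for a slow database query."""
    return {"id": user_id}


cache = TTLCache(ttl_seconds=30)
cache.get_or_load("42", load_profile)  # miss: calls load_profile
cache.get_or_load("42", load_profile)  # hit: served from memory
```

A short TTL keeps data fresher at the cost of more trips to the database, so the right value depends on how stale a response the application can tolerate.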

Cache Invalidation Challenges

One of the biggest challenges with caching is keeping data fresh. Poor cache invalidation strategies can result in outdated information being served to users. Common strategies, such as expiring entries after a time-to-live (TTL) or evicting an entry whenever the underlying data changes, help keep cached data consistent with the source.
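As an illustration of one invalidation strategy, the sketch below evicts a cached entry whenever the underlying record is written, so the next read reloads fresh data. This is only one possible approach. `UserRepository` is a hypothetical class, and two dictionaries stand in for the database and the cache.

```python
class UserRepository:
    """Toy repository that invalidates its cache on every write (illustrative)."""

    def __init__(self) -> None:
        self.database = {}  # stands in for the system of record
        self.cache = {}     # stands in for an in-memory cache

    def read(self, user_id):
        if user_id in self.cache:
            return self.cache[user_id]
        value = self.database.get(user_id)
        if value is not None:
            self.cache[user_id] = value  # populate the cache on a miss
        return value

    def write(self, user_id, value) -> None:
        self.database[user_id] = value
        # Evict rather than update, so readers never see a value the
        # database does not hold.
        self.cache.pop(user_id, None)


repo = UserRepository()
repo.write(1, "alice@example.com")
repo.read(1)                       # loads from the database and caches
repo.write(1, "alice@example.org")
print(repo.read(1))                # reloads the updated value
```

In a distributed setup the database write and the cache eviction are separate operations, so real systems usually pair this pattern with a TTL as a safety net.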

Load Balancers in System Design

Load balancers distribute incoming traffic across multiple servers. This ensures that no single server becomes overloaded and improves system availability. The load balancing concepts by NGINX explain how traffic distribution works.
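Round robin is one of the simplest distribution policies: each new request goes to the next server in a fixed rotation. The sketch below assumes that policy. `RoundRobinBalancer` and the server names are hypothetical, and real load balancers offer other policies as well, such as least connections.

```python
import itertools
from typing import Iterable


class RoundRobinBalancer:
    """Hands out servers in a repeating, fixed order (illustrative)."""

    def __init__(self, servers: Iterable[str]) -> None:
        server_list = list(servers)
        if not server_list:
            raise ValueError("at least one server is required")
        self._cycle = itertools.cycle(server_list)

    def next_server(self) -> str:
        return next(self._cycle)


balancer = RoundRobinBalancer(["app-1", "app-2", "app-3"])
for request_id in range(5):
    print(request_id, "->", balancer.next_server())
```

Round robin works well when servers are similar and requests cost roughly the same. Uneven workloads usually call for a policy that accounts for current load.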

Benefits of Load Balancing

Load balancing improves fault tolerance, scalability, and performance. If one server fails, traffic is redirected to healthy servers automatically. The AWS load balancing overview highlights how load balancers support high availability.
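The failover behavior described above can be sketched by having the balancer track which servers are healthy and skip the rest. In this hypothetical `HealthAwareBalancer`, the `mark_down` and `mark_up` methods stand in for the result of periodic health checks, which a real load balancer would perform itself.

```python
from typing import Iterable


class HealthAwareBalancer:
    """Round-robin balancer that skips servers marked unhealthy (illustrative)."""

    def __init__(self, servers: Iterable[str]) -> None:
        self.servers = list(servers)
        self.healthy = set(self.servers)
        self._index = 0

    def mark_down(self, server: str) -> None:
        self.healthy.discard(server)

    def mark_up(self, server: str) -> None:
        if server in self.servers:
            self.healthy.add(server)

    def next_server(self) -> str:
        # Try each server at most once per call, starting from the rotation point.
        for _ in range(len(self.servers)):
            server = self.servers[self._index]
            self._index = (self._index + 1) % len(self.servers)
            if server in self.healthy:
                return server
        raise RuntimeError("no healthy servers available")


balancer = HealthAwareBalancer(["app-1", "app-2", "app-3"])
balancer.mark_down("app-2")  # e.g. a health check failed
print([balancer.next_server() for _ in range(4)])
```

While `app-2` is marked down, traffic alternates between the two remaining servers, and it rejoins the rotation once `mark_up` is called.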

Message Queues in System Design

Message queues enable asynchronous communication between system components. They help decouple services and handle background tasks efficiently. According to the message queue explanation by RabbitMQ, queues improve system reliability and scalability.

Use Cases of Message Queues

Queues are commonly used for tasks such as email notifications, order processing, and data synchronization. Because work waits in the queue until a consumer is ready, queues let systems absorb traffic spikes instead of overloading downstream services. The event-driven architecture guide explains how queues support scalable systems.
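The producer and consumer roles can be illustrated with Python's standard library. This sketch uses an in-process `queue.Queue` and a single worker thread as a stand-in for a real message broker. The function names and the email task are hypothetical.

```python
import queue
import threading
from typing import List, Optional


def run_worker(tasks: "queue.Queue[Optional[str]]", results: List[str]) -> None:
    """Consumer: pull tasks until the None sentinel arrives."""
    while True:
        recipient = tasks.get()
        if recipient is None:  # sentinel: no more work
            break
        # Stand-in for slow background work such as sending an email.
        results.append(f"sent email to {recipient}")


def process_in_background(recipients: List[str]) -> List[str]:
    """Producer: enqueue work and return once the worker has drained it."""
    tasks: "queue.Queue[Optional[str]]" = queue.Queue()
    results: List[str] = []
    worker = threading.Thread(target=run_worker, args=(tasks, results))
    worker.start()
    for recipient in recipients:
        tasks.put(recipient)  # returns immediately; the worker runs separately
    tasks.put(None)
    worker.join()
    return results


print(process_in_background(["a@example.com", "b@example.com"]))
```

The producer never waits for an individual task to finish, which is the decoupling that lets a request handler respond quickly while slower work continues in the background.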

Combining Core Components Effectively

Modern systems often combine databases, caches, load balancers, and queues to achieve the performance they need. Each component addresses a specific challenge, and together they support scalability. The system design best practices explain how components fit into the overall architecture.

Real-World Example

Large platforms such as social media and e-commerce systems rely on these components to handle millions of users. Databases store data, caches speed up reads, load balancers distribute traffic, and queues manage background processing. The Uber engineering blog provides real-world insights into large-scale system design.

Conclusion

Databases, caching, load balancers, and message queues form the backbone of modern system design. Understanding how these components work and interact is essential for building scalable, reliable, and high-performance applications.

By selecting the right components and applying best practices, engineers can design systems that grow smoothly and handle real-world demands efficiently.
